Welcome to SELMA3D 2025: SSL for 3D light-sheet microscopy image segmentation

- [2025-09-11] The final test phase is open.

- [2025-08-02] The preliminary test phase is open.

- [2025-06-26] The training set has been released. Join our challenge and visit the dataset page for more information.

Background

In modern biological research, the ability to visualize and analyze complex structures within tissues and organisms is crucial. Light-sheet microscopy (LSM), combined with tissue clearing and specific staining, offers a high-resolution, high-contrast way to observe a wide range of biological structures, including cellular and sub-cellular structures, organelles and processes, across diverse samples [1]. Tissue clearing techniques render inherently opaque biological samples transparent, allowing light and imaging reagents (e.g., fluorophores or antibodies) to penetrate deeply into the tissue while preserving its structural integrity and molecular content [2]. Various fluorophores or antibodies can be used to selectively stain specific biological structures within a sample and enhance their contrast under the microscope [3]. After clearing and staining, LSM enables rapid 3D imaging of intricate biological structures at high spatial resolution, providing valuable insights in biomedical fields such as neuroscience [4], immunology [5], oncology [6] and cardiology [7].

Figure 1: Depth color map of the entire mouse body stained with alpha-SMA, which highlights arteries [13].

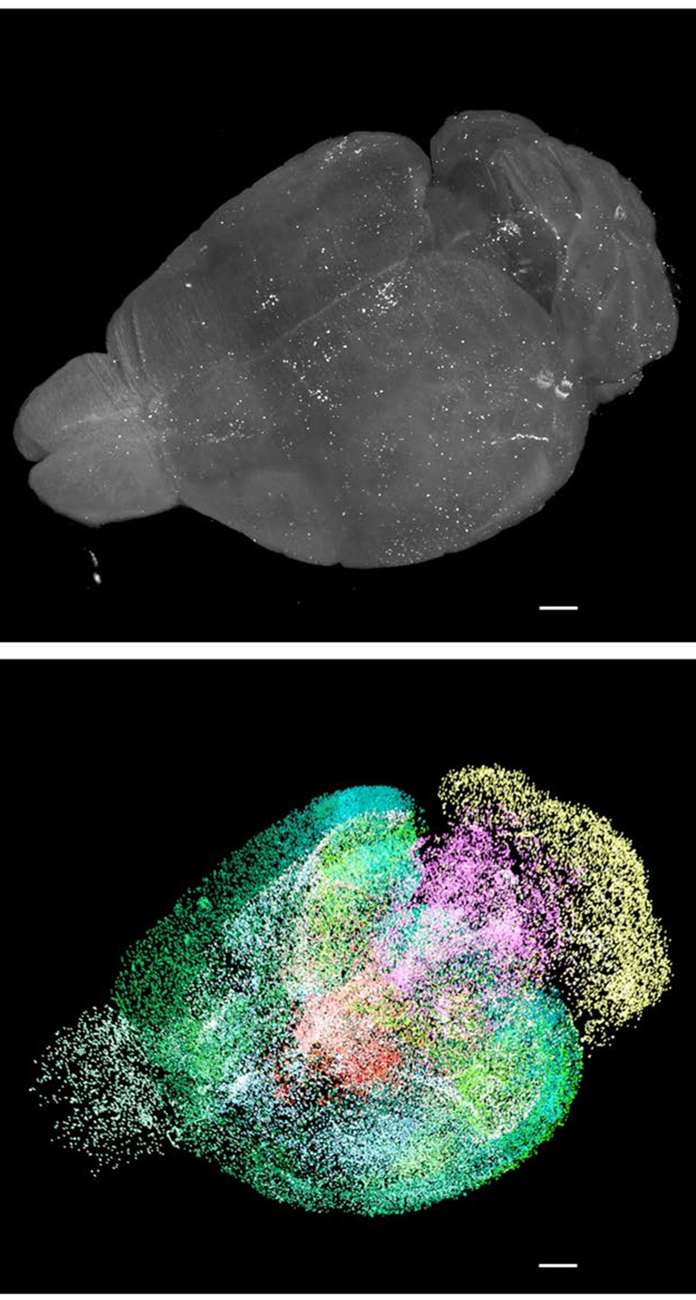

Figure 2: 3D visualization of a whole raw light-sheet image stack of c-Fos+ cells and its segmentation by a custom deep-learning model [14].

Automated image analysis enables scientists to extract structural and functional information at the cellular and sub-cellular level from LSM images of various biosamples at an accelerated pace. Segmentation plays an essential role in this analysis because it identifies the different biological structures in an image [8]. Manual segmentation of large LSM images is extremely time-intensive, since a single image can contain up to 10,000³ voxels. As a result, there is a growing demand for automatic segmentation methods. Recent advances in deep learning-based segmentation models offer promising solutions for automating LSM image segmentation [9-10]. While these models achieve performance comparable to expert human annotators, their success largely relies on supervised learning, which requires extensive, high-quality manual annotations. They are also usually task-specific: they are designed for particular structures and generalize poorly across applications [11]. The widespread adoption of deep learning-based segmentation models is therefore constrained, because every specific LSM image segmentation task requires annotations from experts with domain knowledge.
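A quick back-of-the-envelope calculation puts the 10,000³ figure in perspective. The sketch below assumes uncompressed 16-bit voxels (2 bytes each). This is a common bit depth for LSM data but not a universal one. `volume_size_bytes` is a hypothetical helper written for this illustration.

```python
# Rough storage estimate for a cubic LSM volume.
# Assumption (not stated by the challenge): voxels are stored as
# uncompressed 16-bit integers, i.e. 2 bytes per voxel.

def volume_size_bytes(edge_voxels: int, bytes_per_voxel: int = 2) -> int:
    """Return the uncompressed size in bytes of an edge^3 voxel volume."""
    return edge_voxels ** 3 * bytes_per_voxel


n_voxels = 10_000 ** 3               # one trillion voxels
size = volume_size_bytes(10_000)     # bytes at 2 bytes per voxel
print(f"{n_voxels:.0e} voxels, {size / 1e12:.1f} TB uncompressed")
```

Under this assumption, a single volume of this size holds about 2 TB of raw intensities. This scale is why voxel-by-voxel manual annotation of whole images is impractical.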

It is crucial to develop generalizable models capable of serving multiple LSM image segmentation tasks. Self-supervised learning offers significant advantages in this regard, as it allows deep learning models to pretrain on large-scale, unannotated datasets, thereby learning useful and generalizable representations of LSM image data. Subsequently, the model can be fine-tuned on a smaller labeled dataset for specific segmentation tasks [12].
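One common self-supervised pretext task, masked image modeling, makes the pretrain-then-fine-tune idea concrete. Random blocks of an unlabeled volume are hidden, and a network is trained to reconstruct them. The NumPy sketch below only prepares such training pairs and the masked reconstruction loss. The network itself is omitted. The block size `cube=8`, the 50% mask ratio and the names `make_masked_pair` and `masked_mse` are illustrative assumptions. SELMA3D does not prescribe this or any other pretext task.

```python
import numpy as np


def make_masked_pair(volume, cube=8, mask_ratio=0.5, rng=None):
    """Build (input, target, mask) for a masked-reconstruction pretext task.

    The volume is split into non-overlapping cube^3 blocks and a random
    subset of blocks is zeroed out in the input. A network would then be
    trained to reconstruct the original intensities inside the hidden
    blocks, which requires no manual labels.
    """
    rng = np.random.default_rng() if rng is None else rng
    if any(s % cube for s in volume.shape):
        raise ValueError("every dimension must be divisible by cube")
    grid = tuple(s // cube for s in volume.shape)
    n_blocks = int(np.prod(grid))
    n_masked = int(round(mask_ratio * n_blocks))

    # Pick which blocks to hide, then expand the block mask to voxel resolution.
    block_mask = np.zeros(n_blocks, dtype=bool)
    block_mask[rng.choice(n_blocks, size=n_masked, replace=False)] = True
    mask = block_mask.reshape(grid)
    for axis in range(volume.ndim):
        mask = mask.repeat(cube, axis=axis)

    masked_input = volume.copy()
    masked_input[mask] = 0
    return masked_input, volume, mask


def masked_mse(pred, target, mask):
    """Reconstruction loss computed only on the hidden voxels."""
    return float(np.mean((pred[mask] - target[mask]) ** 2))


rng = np.random.default_rng(0)
vol = rng.random((32, 32, 32), dtype=np.float32)  # stand-in for an unlabeled LSM patch
inp, target, mask = make_masked_pair(vol, cube=8, mask_ratio=0.5, rng=rng)
print(mask.mean())  # 0.5: half of the 64 blocks are hidden
# Baseline loss from echoing the masked input (zeros in the hidden blocks).
print(masked_mse(inp, target, mask))
```

After pretraining on pairs like these, the learned encoder weights would be reused and fine-tuned on the smaller annotated dataset. In that step a segmentation loss would replace `masked_mse`.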

[1] E.H.K. Stelzer, F. Strobl, B. Chang, et al. Light sheet fluorescence microscopy. Nature Reviews Methods Primers 1(1): 73, 2021 Nov.

[2] H.R. Ueda, A. Ertürk, K. Chung, et al. Tissue clearing and its applications in neuroscience. Nature Reviews Neuroscience 21(2): 61-79, 2020 Jan.

[3] P.K. Poola, M.I. Afzal, Y. Yoo, et al. Light sheet microscopy for histopathology applications. Biomedical Engineering Letters 9: 279-291, 2019 July.

[4] H.R. Ueda, H.U. Dodt, P. Osten, et al. Whole-brain profiling of cells and circuits in mammals by tissue clearing and light-sheet microscopy. Neuron 106(3): 369-387, 2020 May.

[5] D. Zhang, A.H. Cleveland, E. Krimitza, et al. Spatial analysis of tissue immunity and vascularity by light sheet fluorescence microscopy. Nature Protocols: 1-30, 2024 Jan.

[6] J. Almagro, H.A. Messal, M.Z. Thin, et al. Tissue clearing to examine tumour complexity in three dimensions. Nature Reviews Cancer 21(11): 718-730, 2021 July.

[7] P. Fei, J. Lee, R.R.S. Packard, et al. Cardiac light-sheet fluorescent microscopy for multi-scale and rapid imaging of architecture and function. Scientific Reports 6: 22489, 2016 Mar.

[8] F. Amat, B. Höckendorf, Y. Wan, et al. Efficient processing and analysis of large-scale light-sheet microscopy data. Nature Protocols 10: 1679-1696, 2015 Oct.

[9] N. Kumar, P. Hrobar, M. Vagenknecht, et al. A Light sheet fluorescence microscopy and machine learning-based approach to investigate drug and biomarker distribution in whole organs and tumors. bioRxiv 2023.09.16.558068.

[10] M.I. Todorov, J.C. Paetzold, O. Schoppe, et al. Machine learning analysis of whole mouse brain vasculature. Nature Methods 17: 442-449, 2020 Mar.

[11] Y. Zhou, M.A. Chia, S.K. Wagner, et al. A foundation model for generalizable disease detection from retinal images. Nature 622: 156-163, 2023 Sept.

[12] R. Krishnan, P. Rajpurkar, E.J. Topol. Self-supervised learning in medicine and healthcare. Nature Biomedical Engineering 6: 1346-1352, 2022 Aug.

[13] H. Mai, J. Luo, L. Hoeher, et al. Whole-body cellular mapping in mouse using standard IgG antibodies. Nature Biotechnology 42: 617-627, 2023 July.

[14] D. Kaltenecker, R. Al-Maskari, M. Negwer, et al. Virtual reality empowered deep learning analysis of brain activity. Nature Methods 21: 1306-1315, 2024 April.

Objective

SELMA3D aims to benchmark self-supervised learning for LSM image segmentation tasks. Participants will be provided with a training dataset consisting of two parts. The first part contains a vast collection of unannotated 3D LSM images from mice and humans for model pretraining. The second part includes annotated cropped patches from 3D LSM images for model fine-tuning.

In the 2024 edition of SELMA3D, we categorized biological structures frequently studied in LSM images into two main types based on morphology: tree-like structures, e.g. vessels and microglia, and spot-like structures, e.g. cell nuclei, c-Fos+ cells and amyloid-beta plaques. Participants were asked to develop a universal self-supervised learning approach that benefits the segmentation of both types of structures. The top-performing teams achieved Dice scores above 70% for both structure types. However, the results across participants suggested that a single self-supervised learning strategy struggles to consistently enhance feature learning for both types simultaneously.
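For reference, the Dice score compares a predicted mask P with a ground-truth mask T as 2|P ∩ T| / (|P| + |T|). A score of 70% therefore means 0.70 on a 0-1 scale. The NumPy sketch below computes Dice for 3D binary masks. Scoring two empty masks as 1.0 is a convention chosen here. The challenge's official evaluation, including how scores are aggregated across images, may differ.

```python
import numpy as np


def dice_score(pred, truth):
    """Dice = 2|P ∩ T| / (|P| + |T|) for two binary masks of the same shape."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    denom = pred.sum() + truth.sum()
    if denom == 0:
        # Both masks empty: scored as perfect agreement here (a convention).
        return 1.0
    return float(2.0 * np.logical_and(pred, truth).sum() / denom)


# Toy 4x4x4 volumes: two 2x2x2 cubes shifted by one voxel along the last axis.
truth = np.zeros((4, 4, 4), dtype=bool)
truth[0:2, 0:2, 0:2] = True
pred = np.zeros((4, 4, 4), dtype=bool)
pred[0:2, 0:2, 1:3] = True

print(dice_score(pred, truth))  # 0.5: 4 overlapping voxels, 8 + 8 voxels in total
```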

This year, we redefine the classification of biological structures into two categories: isolated structures and contiguous structures. Isolated structures are distinct, spatially separate components without physical connections to one another, for example cell nuclei, c-Fos+ cells, amyloid-beta plaques and microglia. Contiguous structures, in contrast, are physically continuous, with their parts connected without interruption, for example vessels and nerves. Based on this classification, the SELMA3D 2025 challenge is divided into two tasks:

- self-supervised segmentation of isolated structures in 3D light-sheet microscopy images

- self-supervised segmentation of contiguous structures in 3D light-sheet microscopy images
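Counting connected components in a binary mask is one way to make the isolated/contiguous distinction concrete. Isolated structures split into roughly one component per object, whereas a contiguous structure such as a vessel forms a single connected network. The pure-Python sketch below uses 26-connectivity in 3D on toy data. It only illustrates the idea and is not the criterion the organizers used to assign structures to tasks. The helpers `count_components` and `cube_at` are hypothetical.

```python
from collections import deque
from itertools import product

# All 26 neighbour offsets in 3D (shared faces, edges and corners).
OFFSETS = [o for o in product((-1, 0, 1), repeat=3) if o != (0, 0, 0)]


def count_components(voxels):
    """Count 26-connected components in a set of foreground (z, y, x) voxels."""
    remaining = set(voxels)
    n_components = 0
    while remaining:
        # Start a new component and flood-fill it with breadth-first search.
        seed = remaining.pop()
        n_components += 1
        queue = deque([seed])
        while queue:
            z, y, x = queue.popleft()
            for dz, dy, dx in OFFSETS:
                neighbour = (z + dz, y + dy, x + dx)
                if neighbour in remaining:
                    remaining.remove(neighbour)
                    queue.append(neighbour)
    return n_components


def cube_at(z, y, x, size=2):
    """Foreground voxels of a small cube, standing in for one nucleus."""
    return {(z + i, y + j, x + k)
            for i in range(size) for j in range(size) for k in range(size)}


# Three well-separated "nuclei": an isolated structure.
spots = cube_at(0, 0, 0) | cube_at(10, 10, 10) | cube_at(20, 0, 5)
# A diagonal "vessel" crossing the volume: a contiguous structure.
vessel = {(i, i, i // 2) for i in range(30)}

print(count_components(spots))   # 3
print(count_components(vessel))  # 1
```

Real segmentation masks are noisier. A vessel mask, for example, may break into fragments where the signal is weak. Component counts are therefore a rough descriptor rather than a strict test.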